Cookieless Domains

The premise of cookieless domains is that one can speed up each HTTP transaction, and increase the number of entities downloaded at the same time, to decrease the time it takes to render a page. This seems perfectly rational when we look at the logic behind it.

A Crash Course In HTTP Transactions

When you request a URL, your browser sends an HTTP request. The request contains various pieces of information: the hostname you are requesting the file from, the file path, and all the cookies that domain has set for you.

All of this information must be sent from your computer to the web server before the file can be downloaded. It takes up bandwidth, and bandwidth takes time. Not all of this information is worthwhile, though. Websites use cookies to associate a session with a logged-in user, to track visitors and statistics, and to do other things. When you request an entity (a file on the Internet) that does not use those cookies, sending them costs bandwidth and time even though they will never be used.
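To put a rough number on that cost, here is a minimal sketch that assembles the same GET request with and without a `Cookie` header and compares the sizes. The host, path, and cookie values are hypothetical, and a real browser sends more headers than this.

```python
from typing import Optional


def build_request(host: str, path: str, cookies: Optional[str] = None) -> bytes:
    """Assemble a minimal HTTP/1.1 GET request as raw bytes."""
    lines = [
        f"GET {path} HTTP/1.1",
        f"Host: {host}",
        "Accept: */*",
    ]
    if cookies:
        # The browser attaches every cookie whose domain and path match the request.
        lines.append(f"Cookie: {cookies}")
    # The header block ends with an empty line (CRLF CRLF).
    return ("\r\n".join(lines) + "\r\n\r\n").encode("ascii")


# Hypothetical cookies: a login session plus an analytics tracker.
cookies = "session_id=" + "a" * 64 + "; _tracker=" + "1" * 48
with_cookies = build_request("example.com", "/images/logo.png", cookies)
without_cookies = build_request("example.com", "/images/logo.png")

overhead = len(with_cookies) - len(without_cookies)
print(f"{len(with_cookies)} bytes with cookies, {len(without_cookies)} without")
print(f"{overhead} extra bytes per request; x40 static files = {40 * overhead} bytes")
```

The overhead is paid on the upload side for every single entity, which is why it adds up on pages with many images, scripts, and stylesheets.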

It is worth noting that a web page is typically a collection of entities. Each image, JavaScript file, and CSS file is its own entity that must be downloaded.

By not having the client send cookies we save bandwidth for the user and time for the transaction. Additionally, web browsers limit the number of simultaneous connections to a single hostname. If we serve static files from a different domain name, the browser can open more connections in parallel while loading the site, increasing the speed even more.
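The effect of the per-hostname limit can be illustrated with a deliberately crude model: assets are split evenly across hostnames, every asset takes the same time, and each hostname allows a fixed number of parallel connections. The asset count and limit below are assumptions; 2 is the per-server limit RFC 2616 recommended and older browsers followed, while modern browsers typically allow around six.

```python
import math


def download_rounds(asset_count: int, hosts: int, per_host_limit: int) -> int:
    """Number of sequential 'waves' of downloads needed when assets are split
    evenly across hosts and each host allows per_host_limit parallel connections.
    Ignores bandwidth, latency differences, and keep-alive/pipelining."""
    per_host = math.ceil(asset_count / hosts)
    return math.ceil(per_host / per_host_limit)


# Assumed: 24 static files, 2 connections per hostname.
print(download_rounds(24, hosts=1, per_host_limit=2))  # 12
print(download_rounds(24, hosts=2, per_host_limit=2))  # 6
```

Real page loads are not this tidy, but the model shows why a second hostname can shorten the waterfall.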

The Cookieless Domain

The first step in setting up a cookieless domain is to decide whether you will use a sub-domain of your domain or register a new domain exclusively for this purpose. In most situations you can get away with using a sub-domain, but there are a few exceptions to this rule.

If your website serves traffic from the root domain (example.com as opposed to www.example.com, for instance) and uses Google AdSense, you cannot make the cookieless domain a sub-domain and MUST use a separate domain name. The reason is that the Google AdSense code sets cookies on the requested domain and all of its sub-domains, and Google does not allow you to override this behavior.
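Why a root-domain cookie leaks onto every sub-domain comes down to cookie domain matching. Below is a minimal sketch of the domain-match rule from RFC 6265 (section 5.1.3) for cookies set with a `Domain` attribute; host-only cookies (no `Domain` attribute) are not modeled, and the hostnames are hypothetical.

```python
import ipaddress


def domain_match(request_host: str, cookie_domain: str) -> bool:
    """Return True if a cookie set with Domain=cookie_domain would be sent
    to request_host (domain matching, RFC 6265 section 5.1.3)."""
    host = request_host.lower()
    domain = cookie_domain.lower()
    if domain.startswith("."):
        domain = domain[1:]  # a leading dot in the Domain attribute is ignored
    if host == domain:
        return True
    try:
        ipaddress.ip_address(host)
        return False  # IP addresses only ever match exactly
    except ValueError:
        pass
    # Suffix match on a label boundary: "static.example.com" matches
    # "example.com", but "badexample.com" does not.
    return host.endswith("." + domain)


print(domain_match("static.example.com", "example.com"))      # True
print(domain_match("static.example.com", "www.example.com"))  # False
print(domain_match("static.example.net", "example.com"))      # False
```

A cookie scoped to example.com follows the browser to static.example.com, while one scoped to www.example.com does not, which is why the root-domain case needs a separate domain.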

Configuring DNS

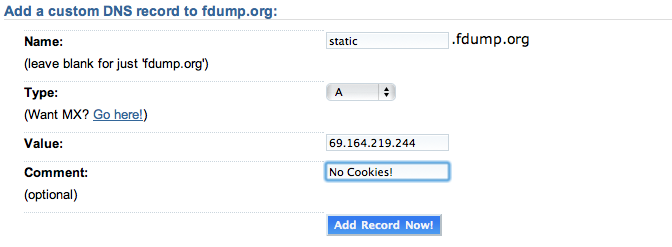

Once a domain has been chosen you must configure DNS.

The general recommendation seems to be to use a CNAME pointing to the domain your website uses. While this ensures the name always resolves to the right address, it is actually counterproductive: resolving a CNAME adds another level of indirection, because the resolver must first find the name it points to and then resolve that name. An A record does not have this indirection.
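The extra step can be shown with a toy resolver over an in-memory zone. This is only a model: the names are hypothetical, the addresses come from the 192.0.2.0/24 documentation range, and real resolvers may return the whole CNAME chain in one response or serve the target from cache.

```python
from typing import Tuple

# Toy zone data (hypothetical names, documentation-range addresses).
ZONE = {
    "example.com": ("A", "192.0.2.10"),
    "static-a.example.net": ("A", "192.0.2.10"),
    "static-cname.example.net": ("CNAME", "example.com"),
}


def resolve(name: str, max_hops: int = 8) -> Tuple[str, int]:
    """Follow CNAME records until an A record is found.
    Returns (address, number of lookups performed)."""
    lookups = 0
    for _ in range(max_hops):
        lookups += 1
        rtype, value = ZONE[name]
        if rtype == "A":
            return value, lookups
        name = value  # CNAME: start over with the target name
    raise RuntimeError("CNAME chain too long")


print(resolve("static-a.example.net"))      # ('192.0.2.10', 1)
print(resolve("static-cname.example.net"))  # ('192.0.2.10', 2)
```

The trade-off is maintenance: an A record must be updated by hand if the server's address changes, whereas a CNAME follows its target automatically.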

In the case of SymKat, we had a few domain names lying around and configured static.fdump.org. Because SymKat is not prefixed with a sub-domain and we run Google AdSense, we had to use another domain name.

Configuring Lighttpd

Once DNS was configured it was time to configure the HTTP server to accept connections for this domain and define some rules for how requests would be processed. The following was the entry for lighttpd.

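```
# Requires mod_access, mod_accesslog and mod_expire in server.modules.
$HTTP["host"] == "static.fdump.org" {
    server.document-root = "/var/www/symkat.com"
    dir-listing.activate = "disable"
    accesslog.filename   = "/var/log/lighttpd/static.fdump.access.log"

    # We only serve the following file types; everything else is denied.
    # The leading dot is escaped so it matches a literal "." only.
    $HTTP["url"] !~ "\.(js|css|jpg|png|gif|avi|wmv|mpg|wav|mp3|txt|rtf|doc|xls|rar|zip|tar|gz|tgz)$" {
        url.access-deny = ( "" )
    }

    # Let browsers cache everything served here for a week.
    expire.url = ( "" => "access plus 7 days" )
}
```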

The document root was set to the same document root the website uses, so the static domain acts as a direct mirror. However, we didn't want requests for actual pages to be processed. So we gathered the file types we might serve and told lighttpd to deny any request that doesn't match them; mod_access answers such requests with a 403 Forbidden.
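The allowlist logic can be checked outside lighttpd. This sketch reproduces the rule in Python: a 403 for anything that does not end in an allowed extension, otherwise a 200 (assuming the file exists). It also shows why the dot in the pattern should be escaped: unescaped, `.` matches any character, so a path such as a hypothetical `/tag/css` archive page would slip through.

```python
import re

EXTENSIONS = "js|css|jpg|png|gif|avi|wmv|mpg|wav|mp3|txt|rtf|doc|xls|rar|zip|tar|gz|tgz"
ALLOWED = re.compile(r"\.(" + EXTENSIONS + r")$")  # literal dot
LOOSE = re.compile(r".(" + EXTENSIONS + r")$")     # dot matches any character


def status_for(path: str) -> int:
    """Status the static host would send: 403 outside the allowlist
    (mod_access's response for url.access-deny), otherwise 200."""
    return 200 if ALLOWED.search(path) else 403


for p in ["/wp-content/themes/site/style.css", "/index.php", "/tag/css"]:
    print(p, status_for(p), bool(LOOSE.search(p)))
```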

We also set a somewhat aggressive cache lifetime of 7 days with mod_expire.
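For reference, "access plus 7 days" means the expiry is counted from the moment of each request. A minimal sketch of the two headers that setting corresponds to (mod_expire sets both `Expires` and `Cache-Control: max-age`); the timestamp passed in below is arbitrary.

```python
import time
from email.utils import formatdate
from typing import Dict, Optional

SEVEN_DAYS = 7 * 24 * 60 * 60  # 604800 seconds


def cache_headers(now: Optional[float] = None) -> Dict[str, str]:
    """Headers matching the intent of "access plus 7 days":
    the lifetime starts at the time of the request."""
    now = time.time() if now is None else now
    return {
        "Expires": formatdate(now + SEVEN_DAYS, usegmt=True),
        "Cache-Control": f"max-age={SEVEN_DAYS}",
    }


print(cache_headers(0))
# {'Expires': 'Thu, 08 Jan 1970 00:00:00 GMT', 'Cache-Control': 'max-age=604800'}
```

The trade-off of a long lifetime is that a changed file keeps its old cached copy for up to a week unless its URL changes.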

Configuring WordPress

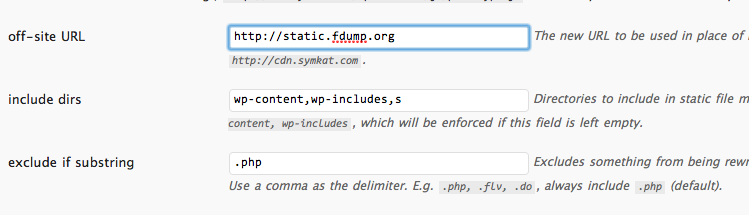

In configuring WordPress we looked for a plugin with a very specific objective: rewrite the links for static content to use our new cookieless domain without breaking WP Super Cache.

The first plugin we tried was W3 Total Cache. While it did rewrite the URLs we wanted rewritten, it completely hijacked WP Super Cache, and in brief testing it seemed to perform poorly in comparison.

The next plugin we tried was OSSDL CDN Off-Linker. It met both requirements by changing the URLs and not breaking WP Super Cache.

The configuration was dead simple. We told it the domain that mirrors the content and the paths to rewrite to the cookieless domain, and we left intact the default of not rewriting PHP files.
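The kind of rewriting such a plugin performs can be sketched as a regex pass over the generated HTML. This is not the plugin's actual code: the site URL (inferred from the document root), the directory list, and the function name are assumptions for illustration. Matching URLs are pointed at the mirror, and PHP files stay on the main domain.

```python
import re

SITE = "http://symkat.com"             # assumed site URL
CDN = "http://static.fdump.org"        # the cookieless mirror
INCLUDE_DIRS = ("wp-content", "wp-includes")  # assumed paths to rewrite
EXCLUDE_EXT = (".php",)                # dynamic files are never rewritten

URL_RE = re.compile(
    re.escape(SITE) + r"/(?:" + "|".join(INCLUDE_DIRS) + r")/[^\"'\s<>)]+"
)


def rewrite(html: str) -> str:
    """Point static asset URLs at the cookieless domain."""
    def swap(match: re.Match) -> str:
        url = match.group(0)
        path = url.split("?", 1)[0]  # ignore query strings like ?ver=3
        if path.endswith(EXCLUDE_EXT):
            return url
        return CDN + url[len(SITE):]
    return URL_RE.sub(swap, html)


page = '<link href="http://symkat.com/wp-content/themes/a/style.css?ver=3">'
print(rewrite(page))
```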

Does It Work?

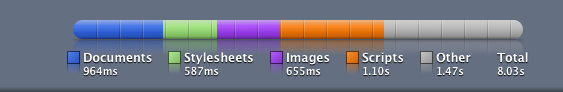

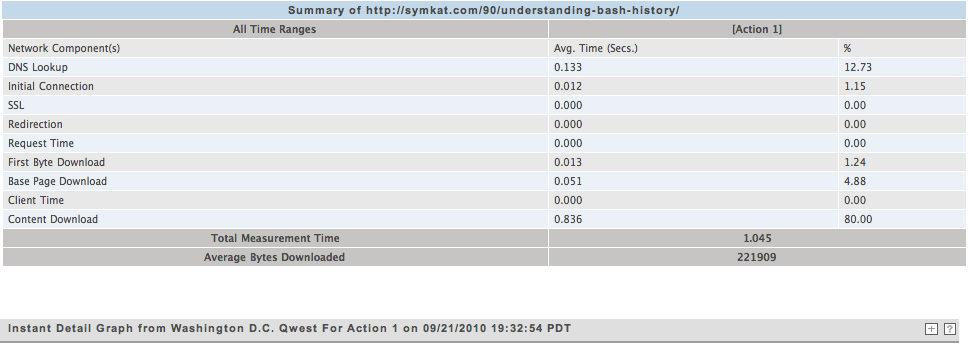

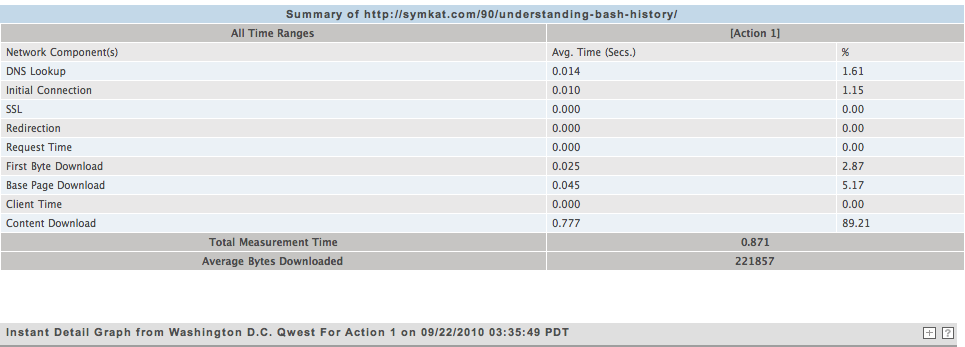

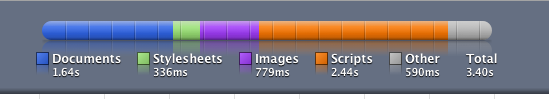

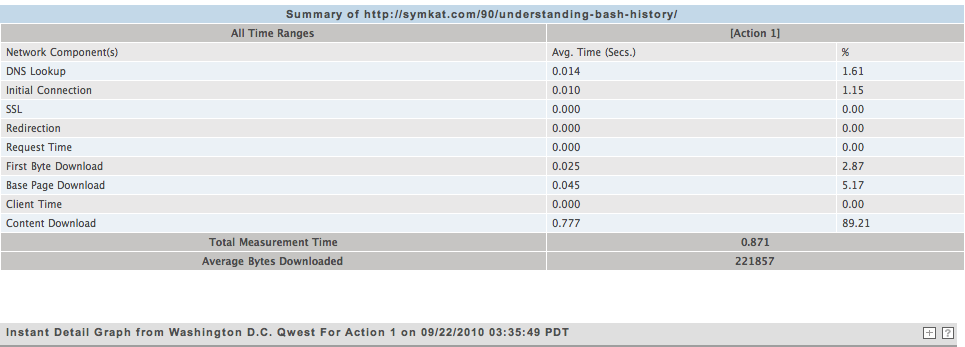

We ran a few tests to see whether performance improved or stayed the same. We used Keynote's Instant Measurement test to see how a specific article performed before and after the changes, and Chrome's Resources tab in the developer tools to see how long things took in our local browser. In Chrome we tested a first and a second load, where the first load had nothing cached by the browser.

Chrome took 8.03 seconds to render the page with no data in the cache. This is absolutely horrible and unacceptable.

The cached version took only 1.79 seconds to render.

Keynote reports show 3.2 seconds from Los Angeles and 1.0 seconds from Washington DC. These results make sense when you take into consideration that the server is located in New Jersey.

And Now?

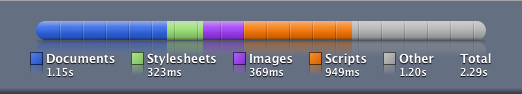

After implementing it all we did some further testing.

Without any entities cached, Chrome downloaded the page in 3.40 seconds. This is more than twice as fast as the original test, which came in at 8.03 seconds.

The test with a primed cache came in slightly worse, at 2.2 seconds as opposed to 1.8 seconds. However, the timing breakdown shows that the slow entries are scripts, and most of those scripts are hosted offsite. So from the perspective of how our own domain performs, this was actually an improvement, even though the raw numbers say otherwise.

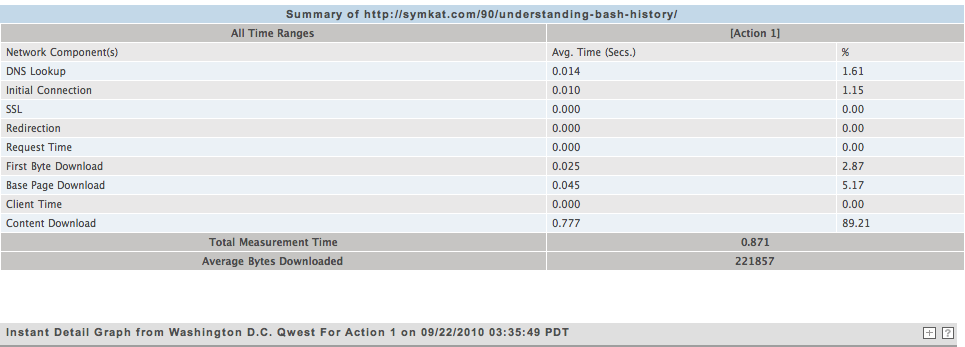

Keynote in Washington DC came in at 0.87 seconds, only slightly better than the previous 1.0 second.

Los Angeles came in at 1.5 seconds, more than twice as fast as the previous 3.2 seconds.
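The before/after figures above can be summarized as speed-up ratios. This sketch just does the arithmetic on the timings quoted in this article (using the 3.2-second Los Angeles baseline from the first Keynote report); a ratio below 1 means the page got slower.

```python
# Timings quoted in this article, in seconds: (before, after).
RESULTS = {
    "Chrome, empty cache": (8.03, 3.40),
    "Chrome, primed cache": (1.79, 2.2),
    "Keynote, Washington DC": (1.0, 0.87),
    "Keynote, Los Angeles": (3.2, 1.5),
}

speedups = {}
for label, (before, after) in RESULTS.items():
    speedups[label] = before / after
    change = (after - before) / before * 100  # negative means faster
    print(f"{label}: {speedups[label]:.2f}x, {change:+.0f}% load time")
```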

Thoughts?

Using a separate domain to serve static content has clearly decreased the download and rendering time of the website in production. Beyond the quantifiable results from Keynote and Chrome, a handful of regulars have commented that the site seems speedier than before, and we've noticed a difference ourselves. Like all other tricks to speed up a website, this should be only one part of your optimizations.